Shantanu Ghosh

Ph.D. Candidate

Department of Electrical and Computer Engineering

Boston University

407-07, Photonics Center

8 St Mary's St, Boston, MA 02215

shawn24@bu.edu

Interested in: robustness, interpretability, radiology report generation (RRG).

Pic courtesy: My wife, Payel Basak

Bio

I am a lifelong proud gator and a Ph.D. candidate in Electrical Engineering at Boston University, advised by Prof. Kayhan Batmanghelich at Batman Lab and Dr. Clare B. Poynton from Boston University Medical Campus. Before our lab moved to Boston, I was a Ph.D. student in the Intelligent Systems Program (ISP) at the University of Pittsburgh. While at Pitt, I collaborated closely with Dr. Forough Arabshahi from Meta, Inc. At Pitt, I was also a cross-registered student at Carnegie Mellon University, where I took the courses Foundations of Causation and Machine Learning (PHI 80625) and Visual Learning and Recognition (RI 16-824). In the summers of 2024 and 2025, I interned as an Applied Scientist at Amazon (AWS SAAR and AWS Optimus) in New York City (NYC) and Pasadena (CA). Before that, I graduated with a Master's degree in Computer Science from the University of Florida.

Background

My research focuses on robustness and generalization in deep learning, with a particular emphasis on leveraging vision-language representations to understand, explain, and audit pre-trained neural networks. My work has been published at top peer-reviewed venues, including ACL, NAACL, ICML, MICCAI, AMIA, JAMIA, and RAD: AI. I believe that understanding a model’s behavior is essential to mitigating bias and building trust in AI systems. At UF, I was fortunate to work as a graduate assistant in the Data Intelligence Systems Lab (DISL) under the supervision of Prof. Mattia Prosperi and Prof. Jiang Bian, where I conducted research at the intersection of deep learning and causal inference. I also worked closely with Prof. Kevin Butler as a Graduate Research Assistant at the Florida Institute of Cybersecurity (FICS) Research. At Amazon, I developed methods for learning robust representations in self-supervised models and auditing biases in AI coding agents.

Research

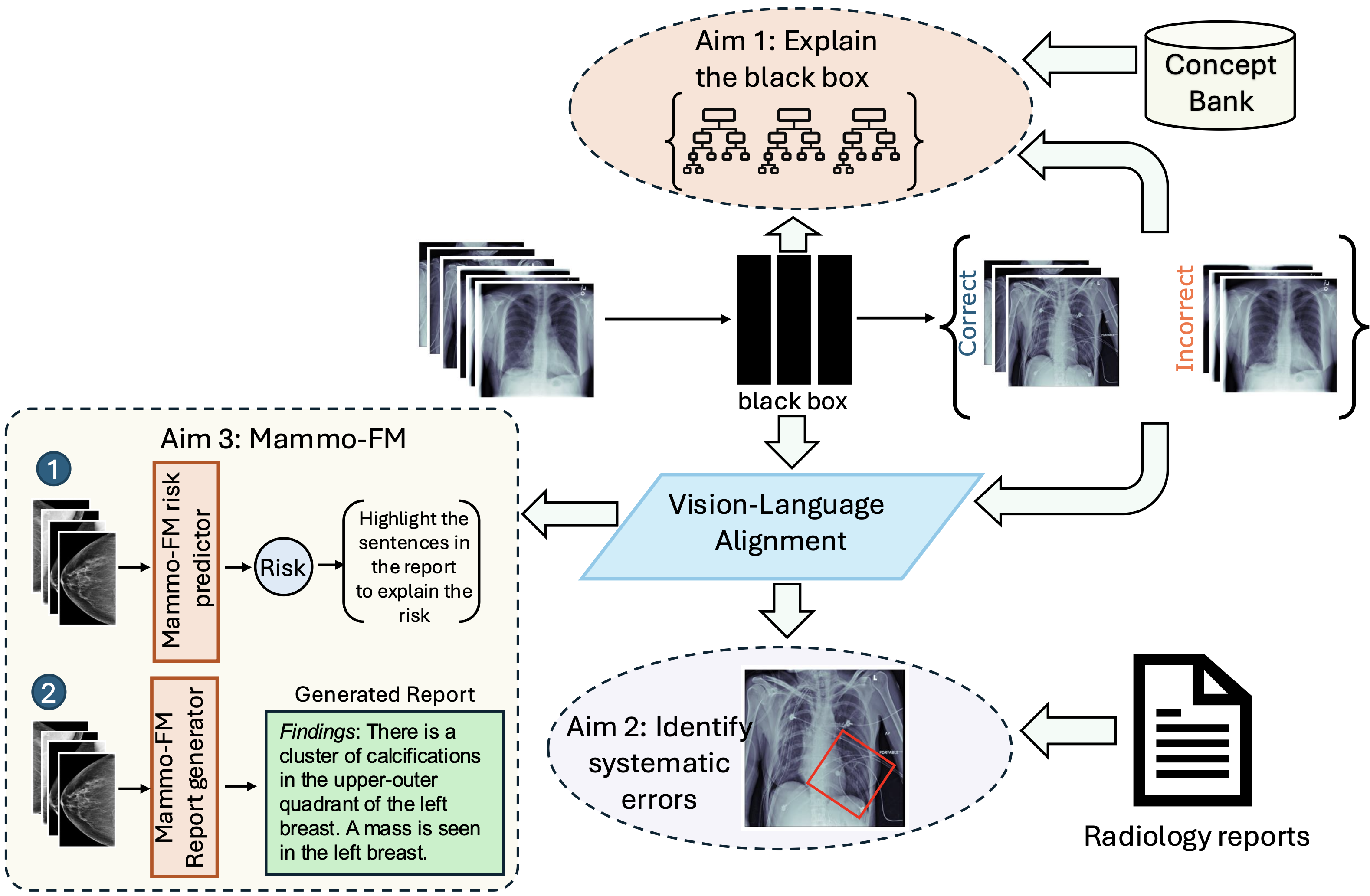

I aim to develop medical imaging AI systems from first principles: systems that do not merely achieve high benchmark performance, but that support clinically meaningful reasoning, expose their limitations, and earn trust through transparency. I view scale as a powerful tool, but not a complete solution. Large pretrained models can encode rich representations, yet they can also exhibit systematic mistakes driven by spurious correlations. My research studies how to detect these failure modes, diagnose their causes, and design interventions that improve reliability and robustness in real clinical settings. I organize my research along two pillars:

- Algorithms: interpretable and trustworthy ML via vision–language alignment and causal reasoning, enabling failure mode discovery of pre-trained blackboxes using free-form clinical language.

- Applications: breast imaging foundation models, where I study the benefits of domain-specific vision–language models over generalist models for cancer detection, prognosis, and radiology report generation.

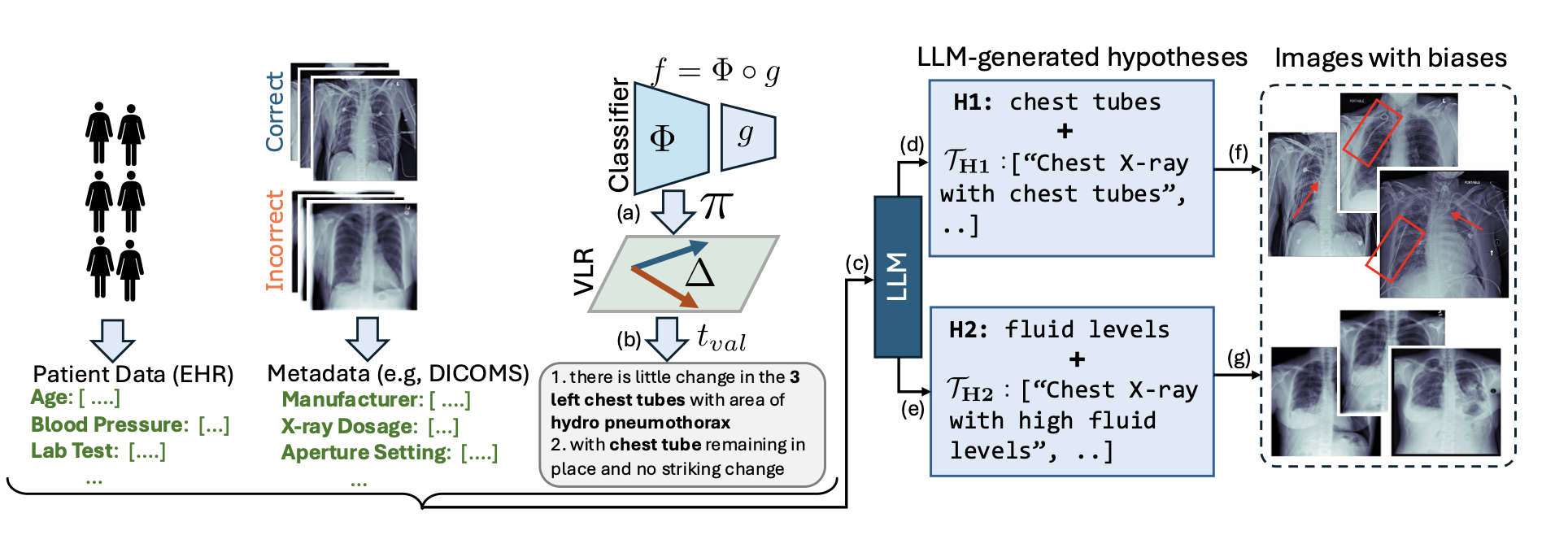

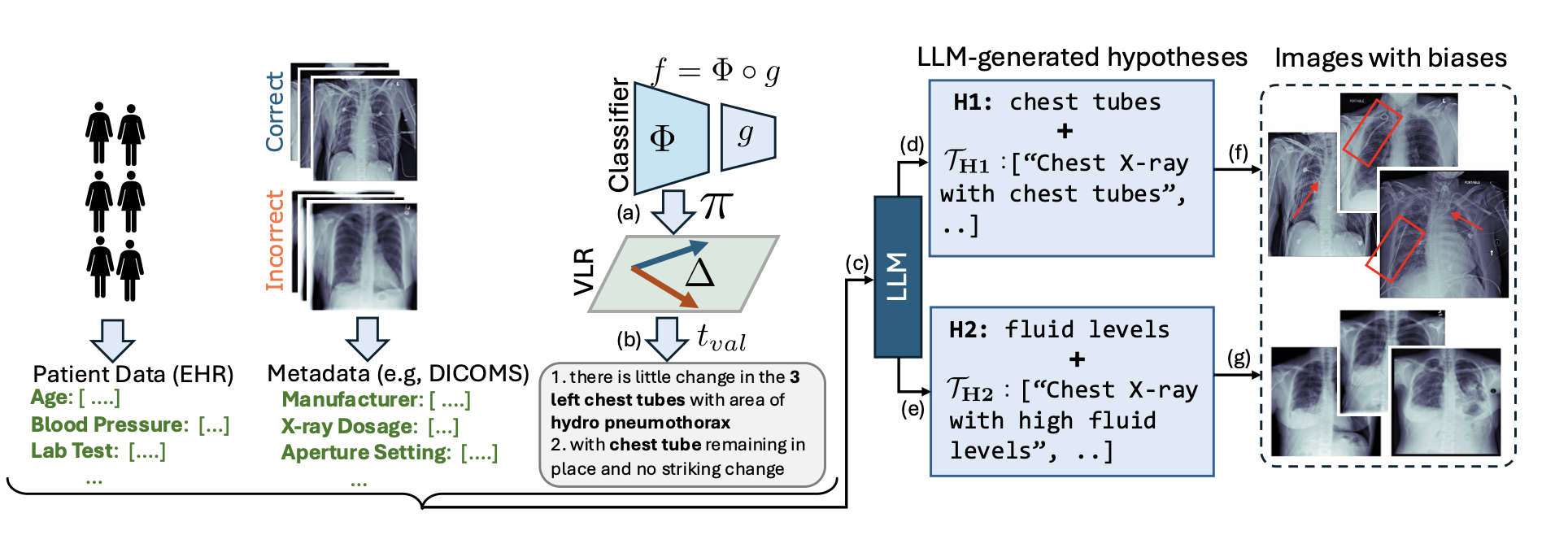

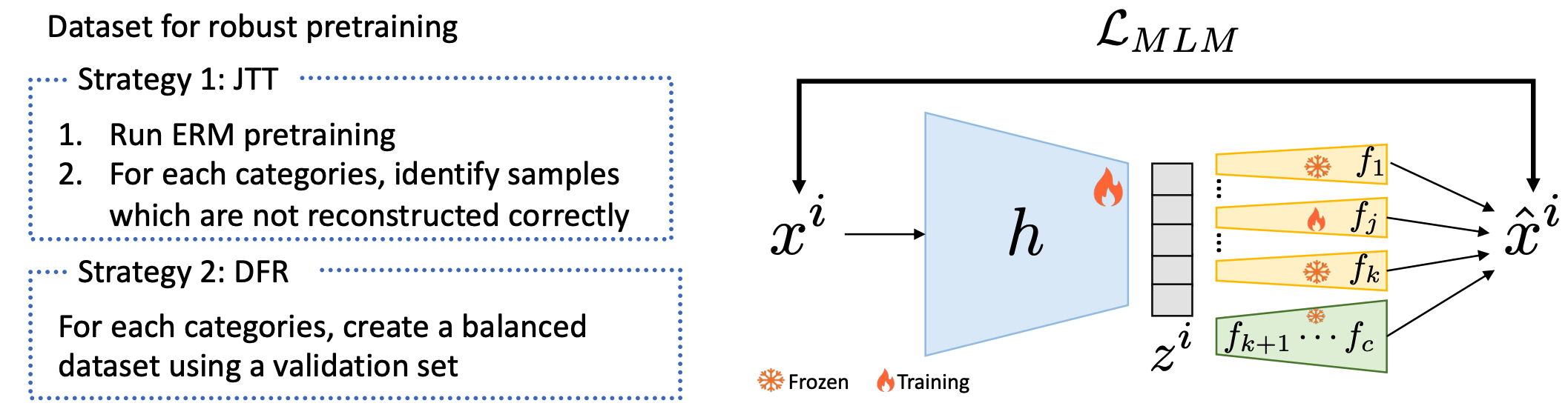

- Can we decipher the failure modes of a deep model through multimodal vision-language representations and large language models (LLMs) for improved reliability and debugging? [LADDER (ACL 2025)]

- Can we learn robust representations in the presence of multiple biases for tabular data? [Amazon internship work (TRL workshop@NeurIPS 2024)]

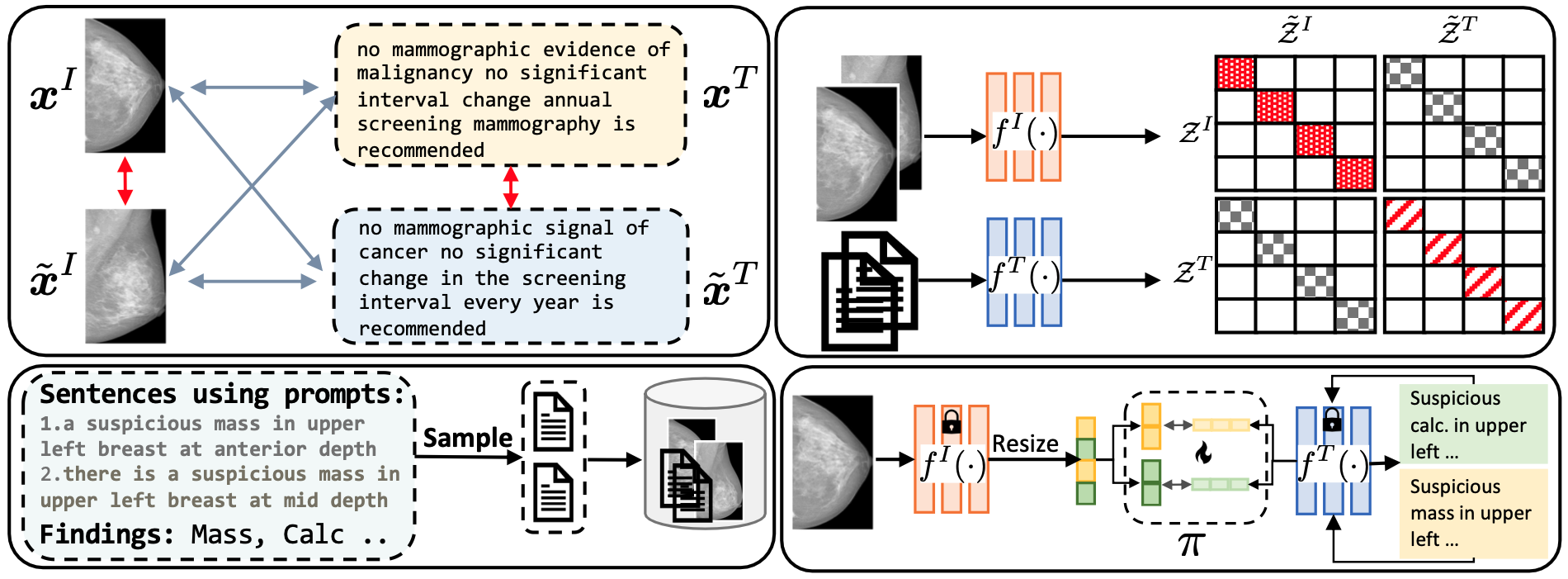

- Can we build robust foundation models for breast cancer detection and radiology report generation? [Mammo-CLIP (MICCAI 2024, top 11%) + Mammo-FM (ArXiv, 2025)]

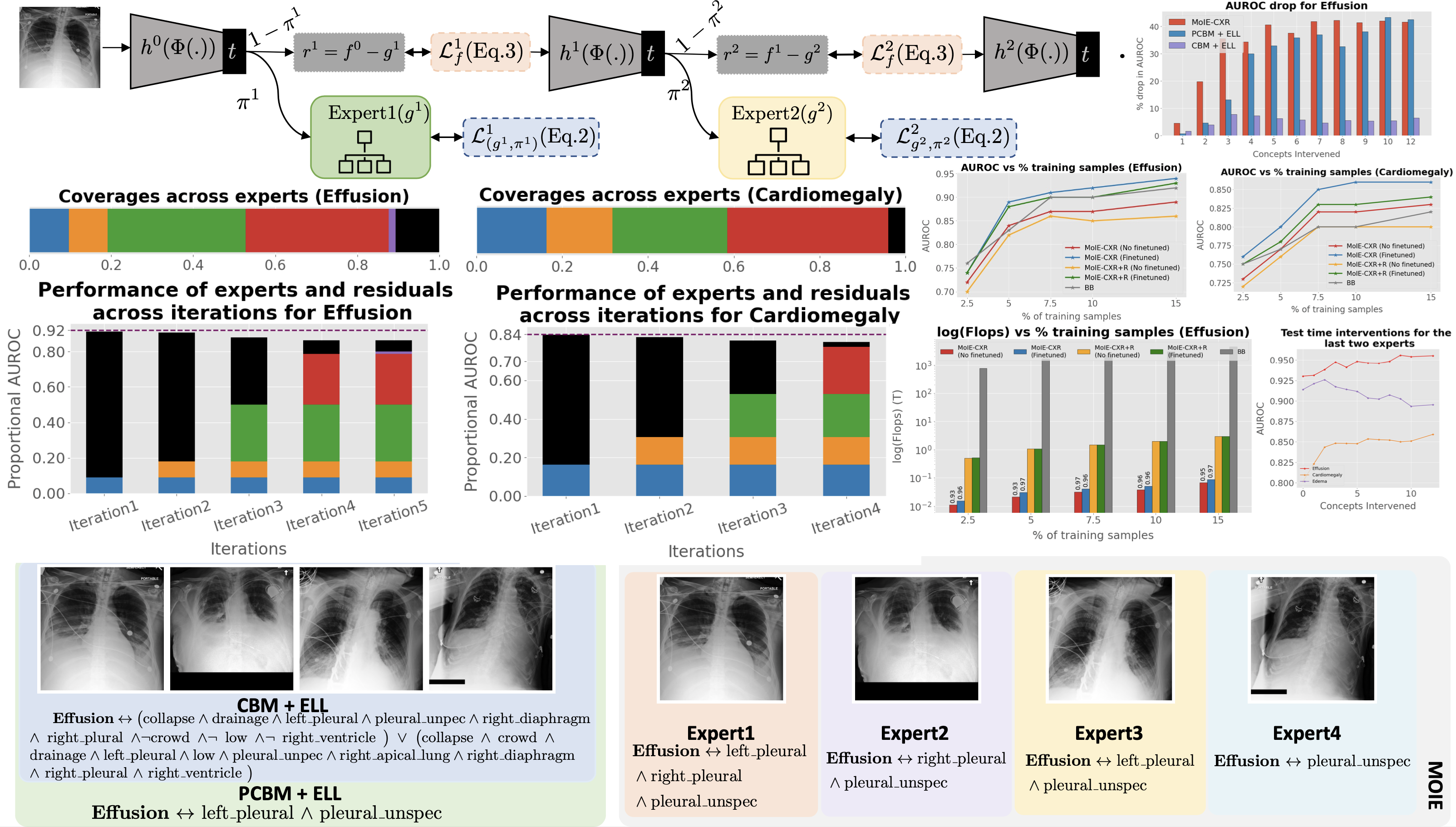

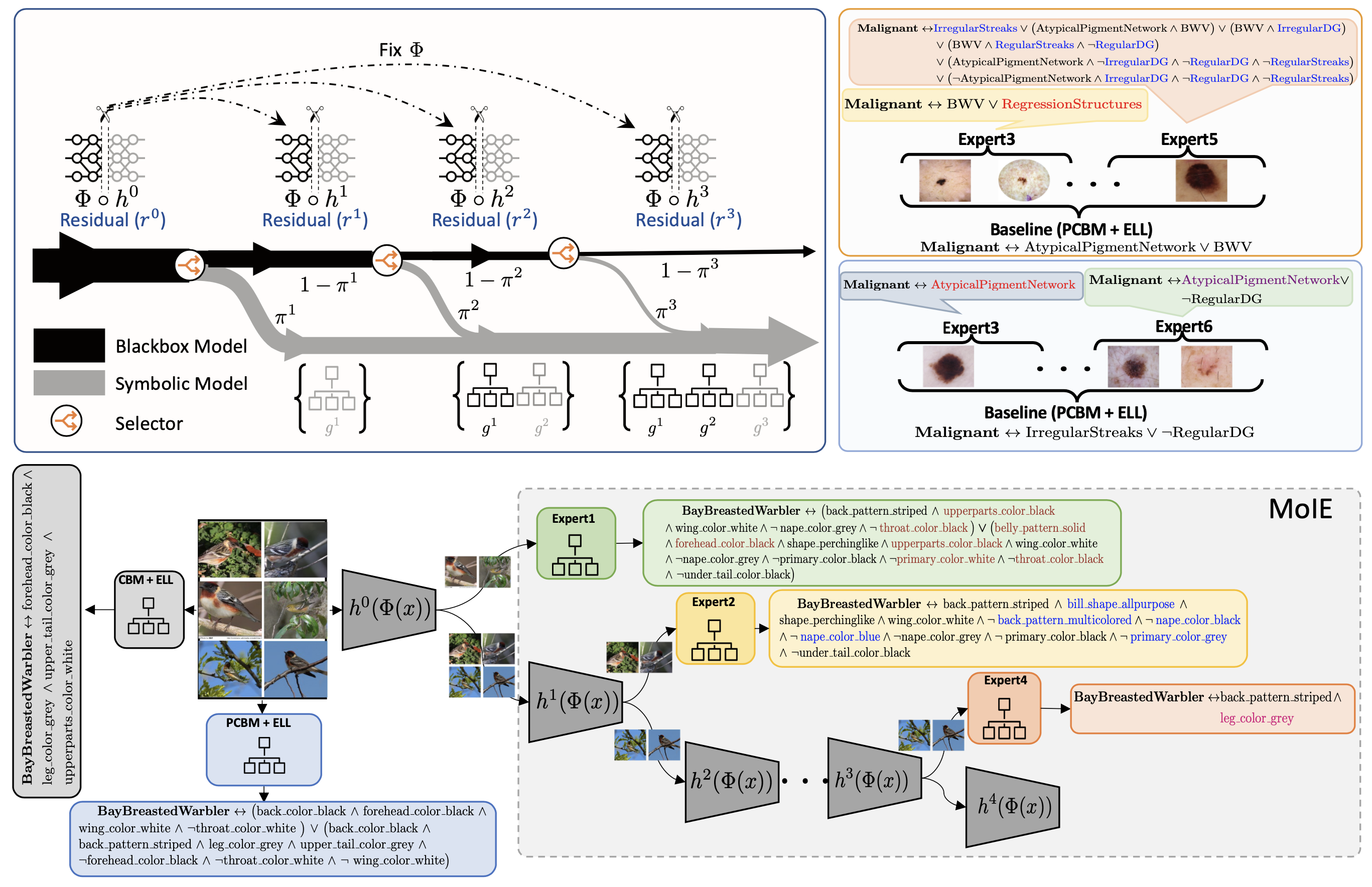

- Can we extract symbolic rules from the representation of a blackbox model using human interpretable concepts? [MoIE (ICML 2023 + SCIS@ICML 2023)]

- Can we use a robust mixture of interpretable models for data- and compute-efficient transfer learning? [MoIE-CXR (MICCAI 2023, top 14% + IMLH@ICML 2023)]

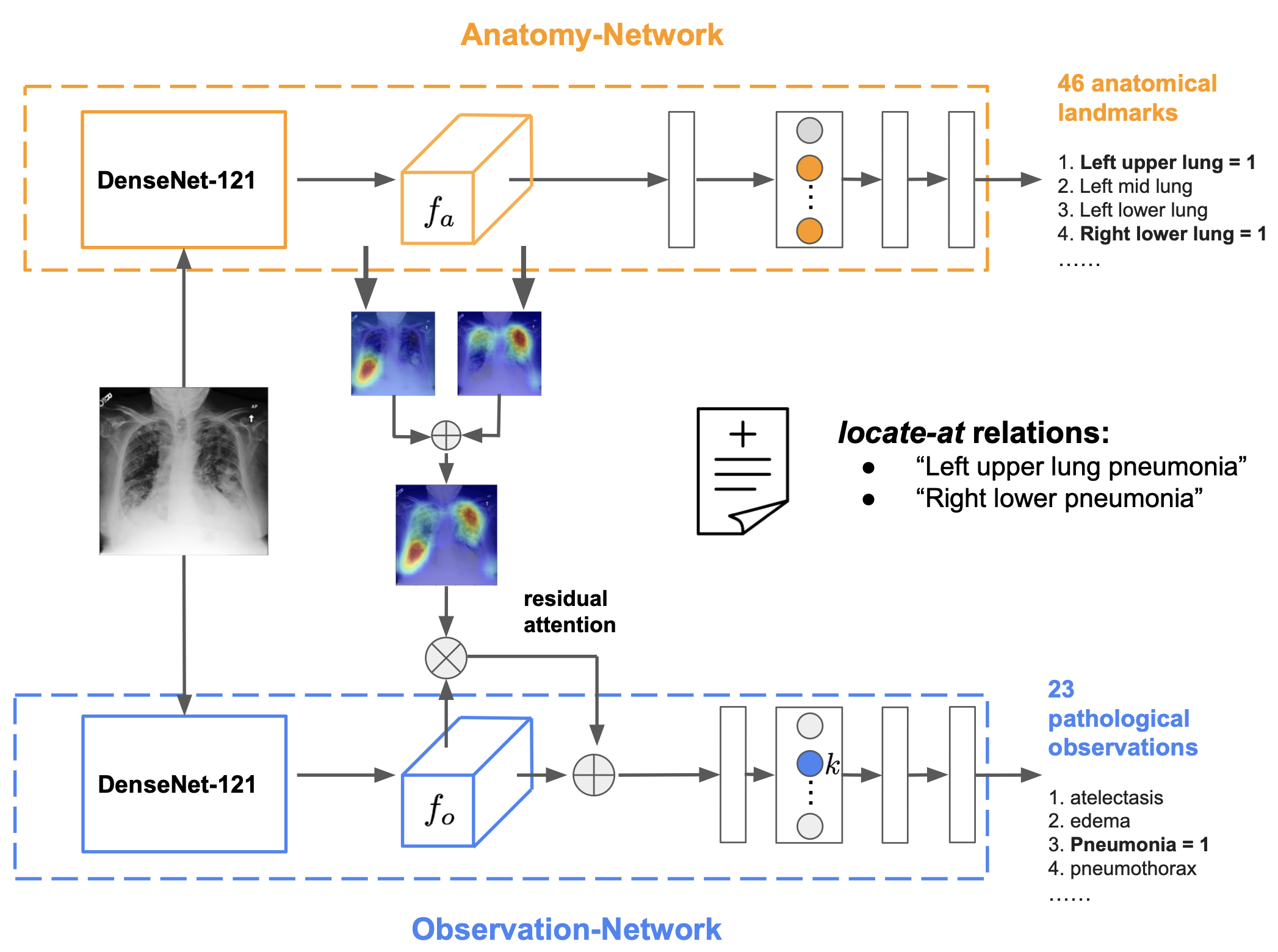

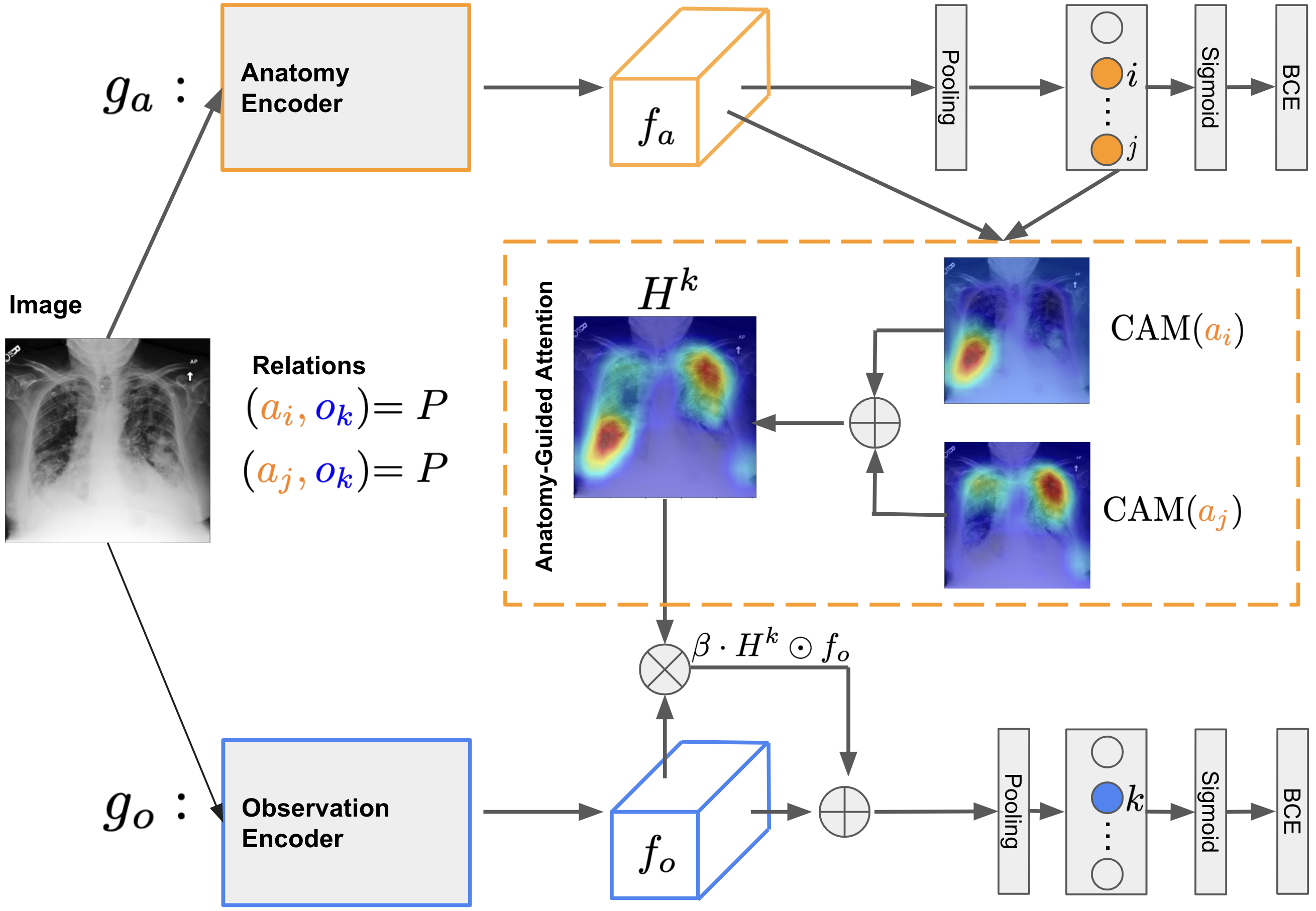

- Can we leverage radiology reports to localize a disease and its progression without ground-truth bounding-box annotations? [AGXNet (MICCAI 2022 + RAD: AI)]

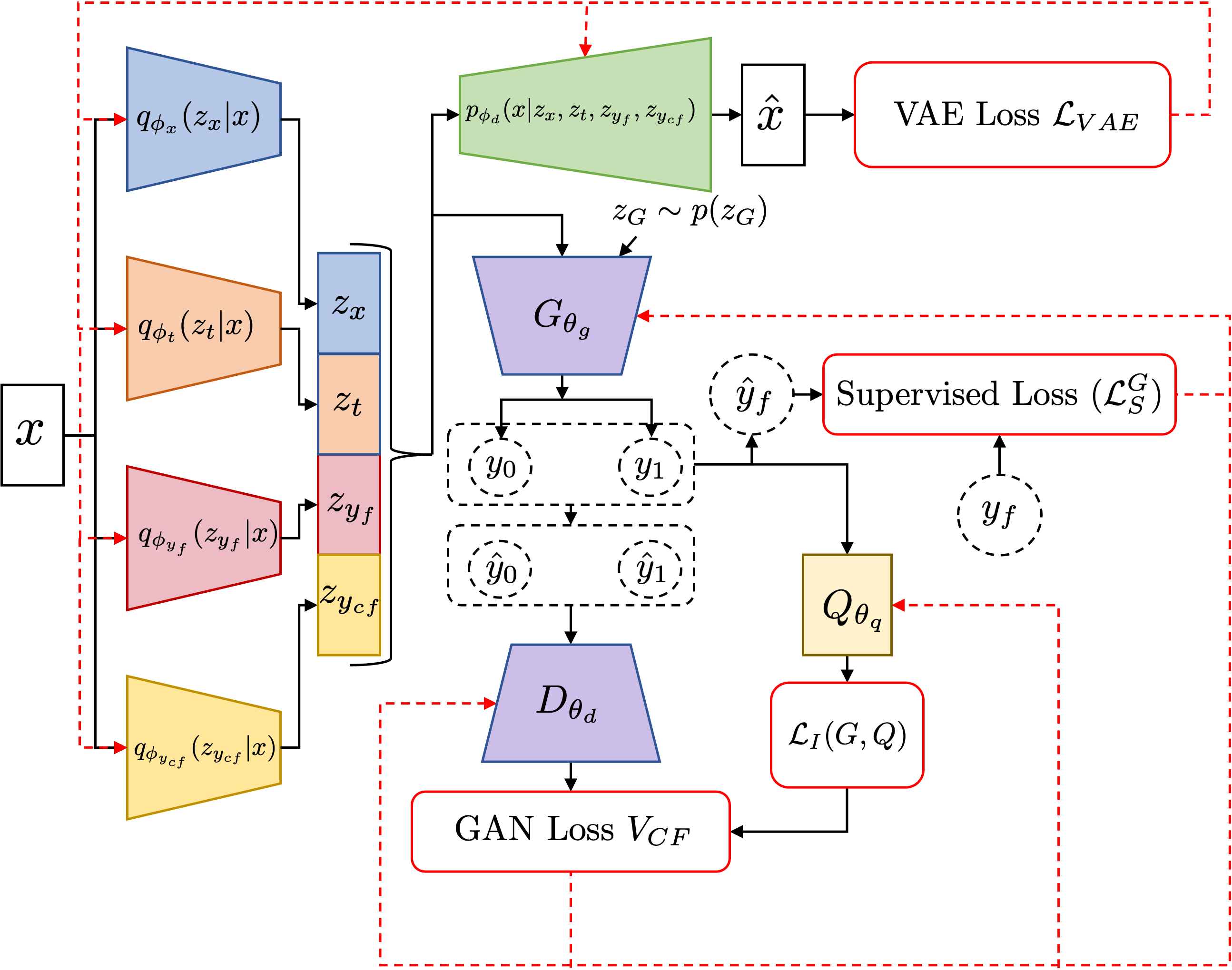

At UF, I was interested broadly in biomedical informatics with a focus on causal inference. I developed deep learning models, namely DPN-SA (JAMIA 2021), PSSAM-GAN (CMPB-U 2021) and DR-VIDAL (AMIA 2022, oral), to compute propensity scores for the efficient estimation of individual treatment effects (ITE). For a detailed overview of my Master's research, refer to the slides available at this link.
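To illustrate how propensity scores feed into treatment effect estimation, here is a minimal sketch of the textbook inverse propensity weighting (IPW) estimator of the average treatment effect. It is not the DPN-SA, PSSAM-GAN, or DR-VIDAL method, which learn propensity scores with deep models; the function name `ipw_ate` and the toy inputs are assumptions made purely for illustration, and the propensity scores are taken as given.

```python
def ipw_ate(treatments, outcomes, propensities):
    """Textbook IPW estimate of the average treatment effect (illustrative only).

    treatments:   0/1 indicators (1 = treated)
    outcomes:     observed outcomes
    propensities: estimated P(treated | covariates), strictly between 0 and 1
    """
    n = len(treatments)
    if n == 0 or not (n == len(outcomes) == len(propensities)):
        raise ValueError("inputs must be non-empty and of equal length")

    total = 0.0
    for t, y, e in zip(treatments, outcomes, propensities):
        if not 0.0 < e < 1.0:
            raise ValueError("propensity scores must lie strictly in (0, 1)")
        # Treated units are up-weighted by 1/e, control units by 1/(1 - e).
        total += t * y / e - (1 - t) * y / (1.0 - e)
    return total / n


# Toy example (hypothetical numbers): balanced assignment, constant effect of 2.
print(ipw_ate([1, 0, 1, 0], [3.0, 1.0, 3.0, 1.0], [0.5, 0.5, 0.5, 0.5]))  # 2.0
```

Propensities close to 0 or 1 inflate the weights and make the estimate unstable, which is one reason the quality of the propensity model matters.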

Deep Learning Resources

My friend Kalpak Seal and I have developed a comprehensive repository where you can access a curated collection of academic lecture videos focused on machine learning, deep learning, computer vision, and natural language processing (NLP). If you're interested in contributing to this resource, feel free to collaborate with us by submitting a pull request. Whether it's adding new lecture videos or improving the existing structure, we welcome all contributions!

News

- [Jan 2026] Successfully defended my Ph.D. proposal today. Here are the slides.

- [May 2025] Ladder is accepted at ACL 2025. Using LLMs, Ladder detects the blind spots of deep learning classifiers, where they make systematic mistakes. Code is available here. I'm also joining as an Applied Scientist Intern at Amazon AWS Optimus Team in Pasadena, CA under the supervision of Dr. Ankan Bansal.

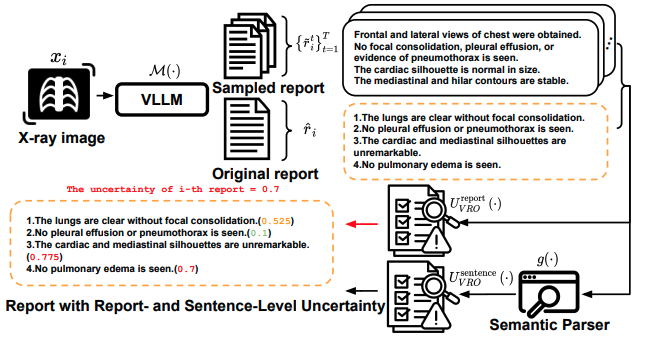

- [Jan 2025] Our collaborative work to reduce hallucination for CXR report generation is accepted at NAACL 2025 Findings.

- [Oct 2024] My internship work at Amazon on representation learning on tabular data is accepted at the 3rd Table Representation Learning Workshop @ NeurIPS 2024. I am also recognized as a top reviewer at NeurIPS 2024.

- [Aug 2024] Our collaborative work Anatomy-specific Progression Classification in Chest Radiographs via Weakly Supervised Learning is accepted at Radiology: Artificial Intelligence. Code and checkpoints are available here.

- [Jun 2024] I'm joining as an Applied Scientist Intern at Amazon Web Services (AWS) Security Analytics and AI Research (SAAR) Team in New York City under the supervision of Dr. Mikhail Kuznetsov. My project aims at learning robust representations to mitigate systematic errors in self-supervised models.

- [May 2024] Mammo-CLIP is accepted (Early accept, top 11% out of 2,869 submissions) at MICCAI 2024. It is the first vision language model trained with mammogram+report pairs of real patients. Code and checkpoints are available here.

- [Jun 2023] I'm now a Ph.D. candidate. Also, two papers are accepted at SCIS and IMLH workshops at ICML 2023.

- [May 2023] Our work Distilling BlackBox to Interpretable models for Efficient Transfer Learning is accepted (Early accept, top 14% out of 2,250 submissions) at MICCAI 2023.

- [Apr 2023] Our work Dividing and Conquering a BlackBox to a Mixture of Interpretable Models: Route, Interpret, Repeat is accepted at ICML 2023.

- [Dec 2022] I'm joining Boston University in Spring 2023 in the Department of Electrical and Computer Engineering following my advisor's move. My research will be supported by a Doctoral Research Fellowship.

- [Jun 2022] Our work on doubly robust estimation of ITE is accepted as an oral presentation at the AMIA 2022 Annual Symposium.

- [Jun 2022] Our work on weakly supervised disease localization is accepted at MICCAI 2022.

- [Aug 2021] I'm joining the University of Pittsburgh in the Intelligent Systems Program under the supervision of Dr. Kayhan Batmanghelich in Fall 2021.

- [May 2021] I graduated with a Master's degree in Computer Science from the University of Florida. Go Gators!!

- [Apr 2021] Our work to balance the unmatched control samples by simulating treated samples using a GAN is accepted in the journal Computer Methods and Programs in Biomedicine Update.

- [Dec 2020] Our work to estimate the propensity score by dimensionality reduction using an autoencoder is accepted in the Journal of the American Medical Informatics Association.

- [Apr 2020] I'm joining DISL lab as a graduate assistant under the supervision of Prof. Mattia Prosperi and Prof. Jiang Bian.

- [Aug 2019] I'm moving to the US to join the Master's program in the Department of Computer Science at the University of Florida in Fall 2019.

Publications

2025

Semantic Consistency-Based Uncertainty Quantification for Factuality in Radiology Report Generation

2024

Distributionally robust self-supervised learning for tabular data

Anatomy-specific Progression Classification in Chest Radiographs via Weakly Supervised Learning

Mammo-CLIP: A Vision Language Foundation Model to Enhance Data Efficiency and Robustness in Mammography

2023

Distilling BlackBox to Interpretable models for Efficient Transfer Learning

Dividing and Conquering a BlackBox to a Mixture of Interpretable Models: Route, Interpret, Repeat

2022

Anatomy-Guided Weakly-Supervised Abnormality Localization in Chest X-rays

DR-VIDAL: Doubly Robust Variational Information-theoretic Deep Adversarial Learning for Counterfactual Prediction and Treatment Effect Estimation

2021

Propensity score synthetic augmentation matching using generative adversarial networks (PSSAM-GAN)

Deep propensity network using a sparse autoencoder for estimation of treatment effects

Academic Projects

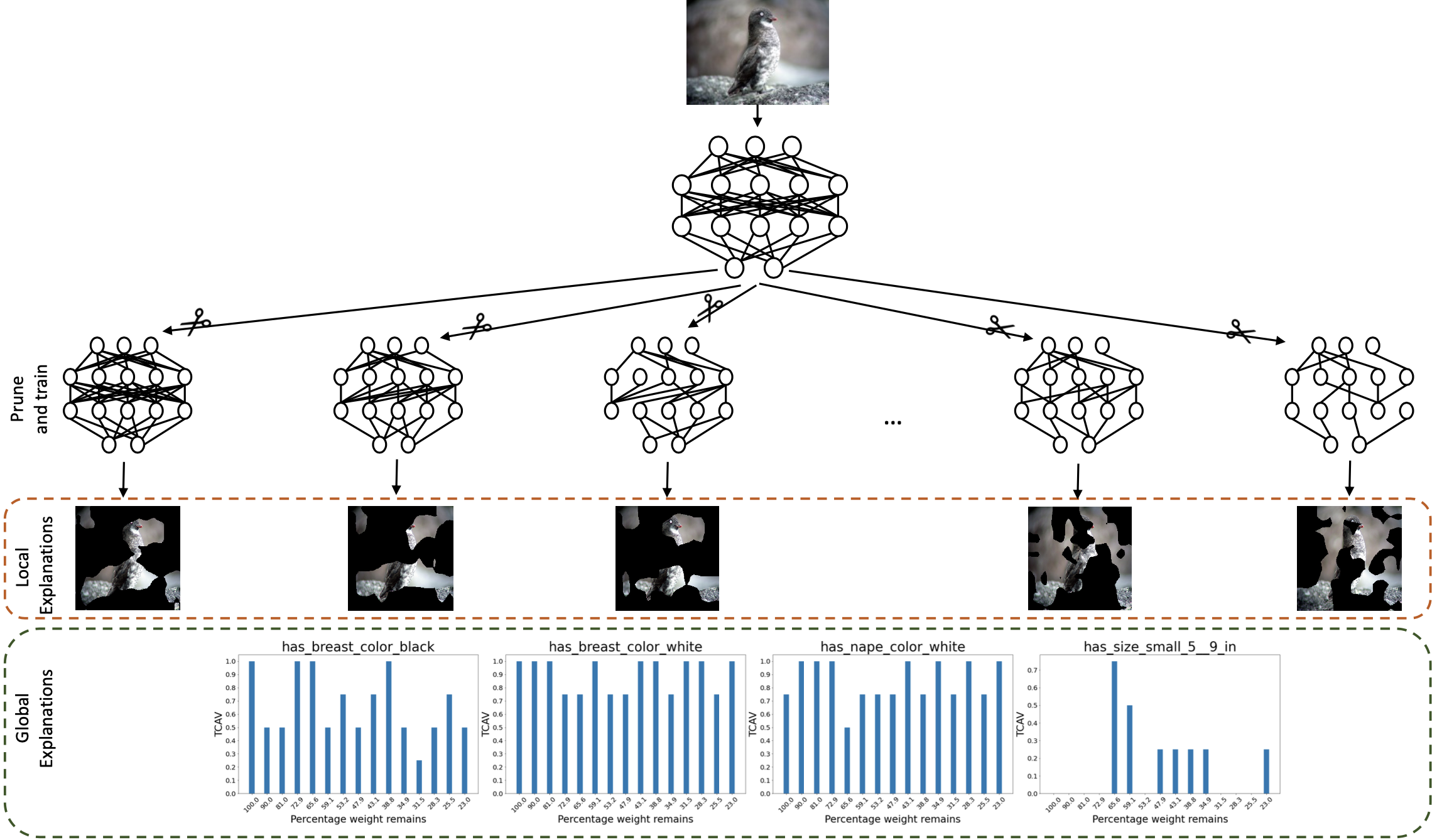

Explaining why Lottery Ticket Hypothesis Works or Fails

For the 16-824: Visual Learning and Recognition course at CMU, we studied the relationship between pruning and explainability. We tested whether explanations generated from a network pruned using the Lottery Ticket Hypothesis (LTH) remain consistent. Specifically, we pruned a neural network using LTH, then generated and compared local and global explanations using Grad-CAM and concept activations, respectively. I later expanded the analysis into an arXiv paper.
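For readers unfamiliar with LTH-style pruning, the core step can be sketched as follows: rank trained weights by magnitude, mask out the smallest fraction, and reset the surviving weights to their initial values. This is a simplified, single-round sketch on a flat list of weights, not the code used in the project; the function names and toy values are hypothetical.

```python
def magnitude_prune_mask(trained_weights, prune_fraction):
    """Return a 0/1 mask that zeroes the smallest-magnitude weights."""
    if not 0.0 <= prune_fraction < 1.0:
        raise ValueError("prune_fraction must be in [0, 1)")
    n_prune = int(len(trained_weights) * prune_fraction)
    # Indices sorted from smallest to largest absolute trained weight.
    order = sorted(range(len(trained_weights)), key=lambda i: abs(trained_weights[i]))
    mask = [1] * len(trained_weights)
    for i in order[:n_prune]:
        mask[i] = 0
    return mask


def lottery_ticket_reset(initial_weights, mask):
    """Rewind surviving weights to their initial values; pruned ones stay zero."""
    return [w0 * m for w0, m in zip(initial_weights, mask)]


# Toy example (hypothetical values): prune half of four weights.
trained = [0.9, -0.05, 0.3, -0.7]
initial = [0.1, 0.2, -0.3, 0.4]
mask = magnitude_prune_mask(trained, prune_fraction=0.5)
print(mask)  # [1, 0, 0, 1]
ticket = lottery_ticket_reset(initial, mask)  # subnetwork to retrain from its initial values
```

In the iterative variant of LTH, this prune-and-rewind step is repeated over several rounds of training, and explanations can then be compared across the resulting subnetworks.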

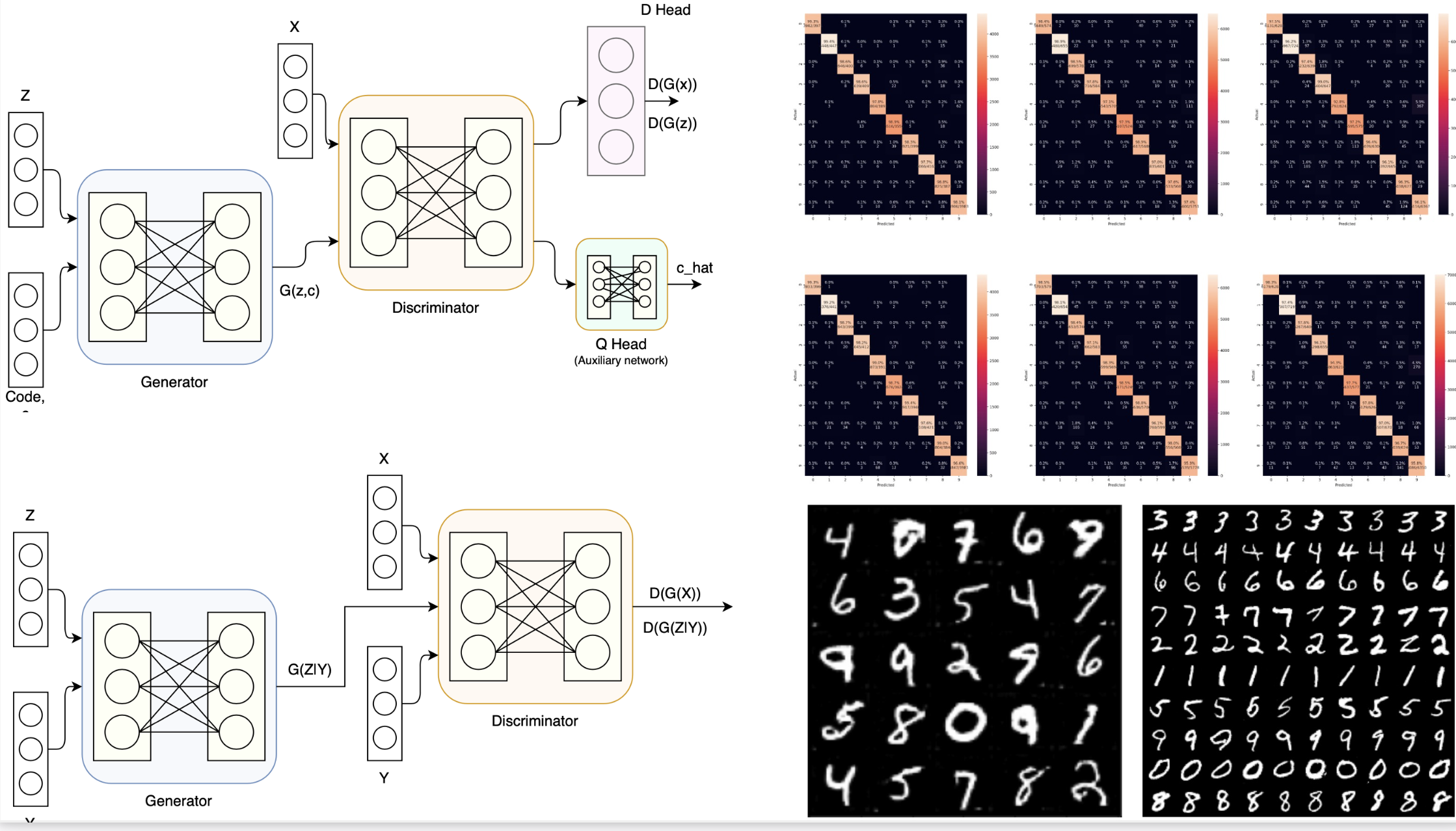

Efficient classification by data augmentation using CGAN and InfoGAN

For the CIS6930 - Deep Learning for Computer Graphics course at UF, we used two GAN variants, (1) Conditional GAN and (2) InfoGAN, to augment the dataset and compared a classifier’s performance under a novel dataset augmentation algorithm. Our experiments showed that, with fewer training samples from the original dataset plus augmentation via generative models, a classifier trained from scratch achieved similar accuracy.

Deep Colorization

I created a CNN model to color grayscale face images for the CIS6930 - Deep Learning for Computer Graphics course while I was a Master's student at UF.

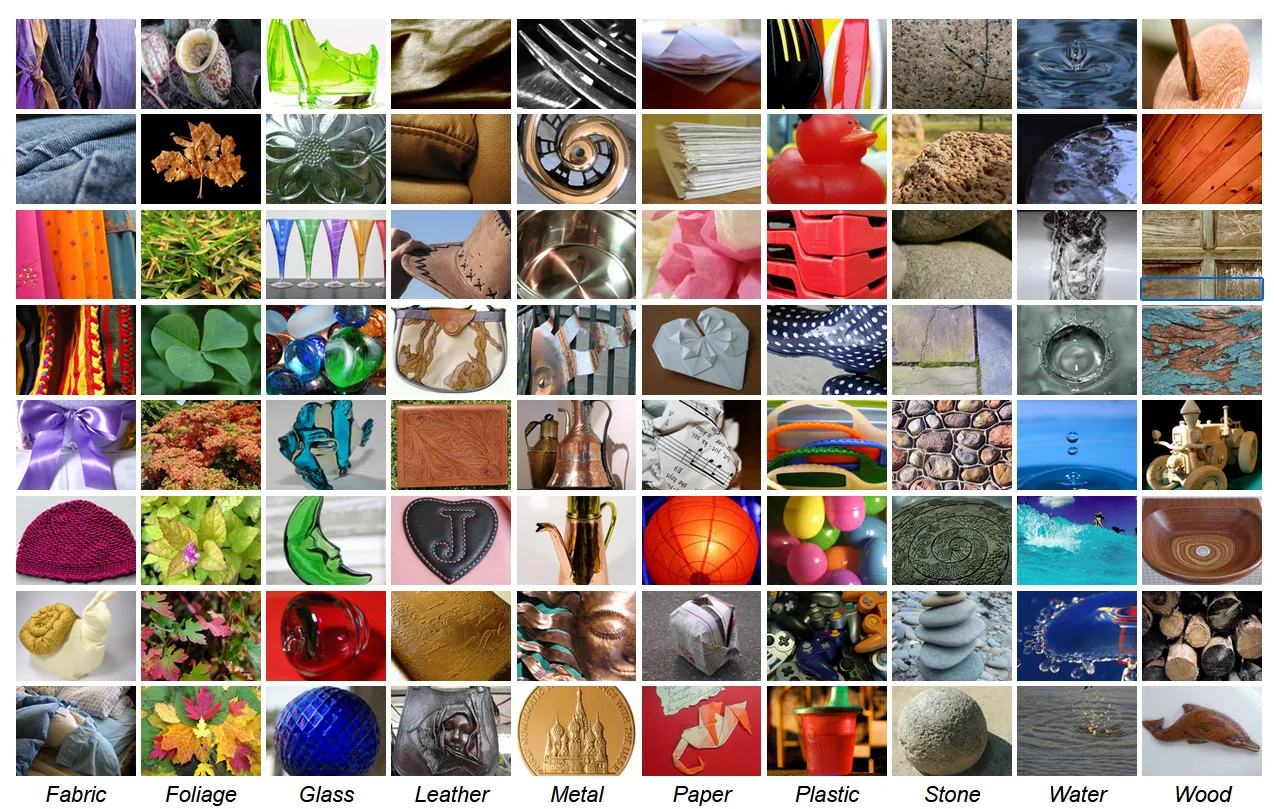

Deep Multitask Texture Classifier (MTL-TCNN)

As part of the independent research study in Spring 2020 (Feb–April), under Dr. Dapeng Wu, I developed a Deep Convolutional Multitask Neural Network (MTL-TCNN) to classify textures. We used an auxiliary head to detect normal images (non-textures) to regularize the main texture detector head.
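The idea of regularizing a main head with an auxiliary head can be sketched as a weighted sum of two losses computed on a shared representation. The snippet below is a minimal illustration under assumed names (`multitask_loss`, `aux_weight`) and an assumed weighting of 0.5; it is not the MTL-TCNN training code.

```python
import math


def cross_entropy(logits, target):
    """Softmax cross-entropy for a single example (numerically stabilized)."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def multitask_loss(texture_logits, texture_label, aux_logits, aux_label, aux_weight=0.5):
    """Main texture loss plus a down-weighted auxiliary loss.

    aux_weight is a hypothetical hyperparameter controlling how strongly the
    auxiliary (texture vs. non-texture) head regularizes the shared features.
    """
    main = cross_entropy(texture_logits, texture_label)
    aux = cross_entropy(aux_logits, aux_label)
    return main + aux_weight * aux


# Toy example (hypothetical logits): 3 texture classes, 2 auxiliary classes.
loss = multitask_loss([2.0, 0.5, -1.0], 0, [0.2, 1.3], 1)
print(round(loss, 4))
```

Because both heads backpropagate into the same convolutional features, gradients from the auxiliary task act as a regularizer on the main texture classifier.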

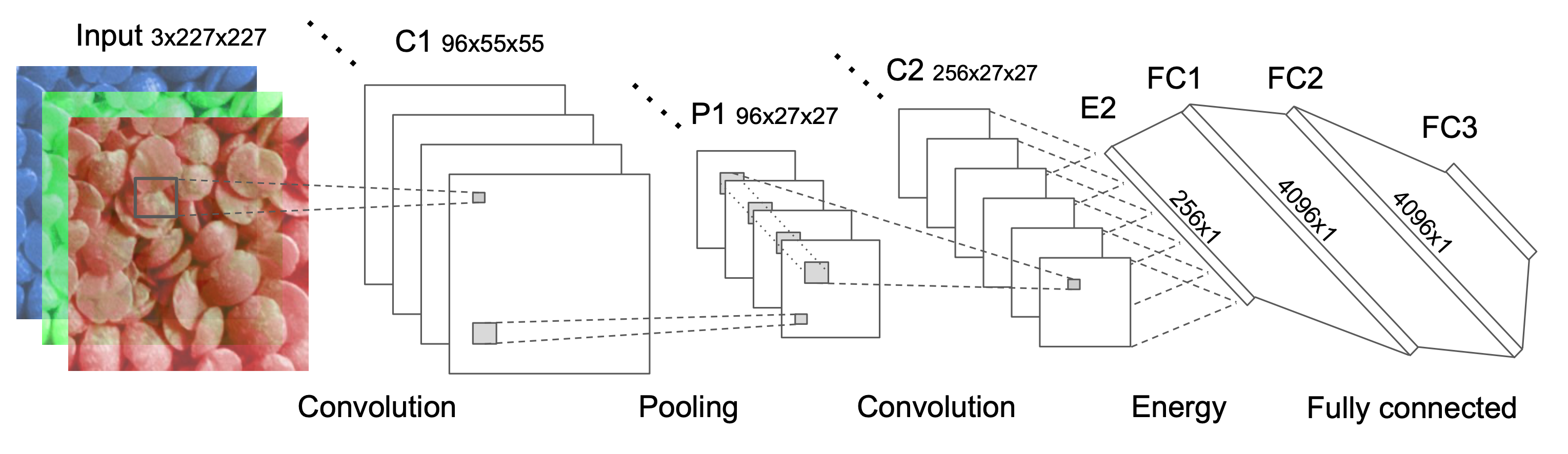

Implementation of TCNN3 paper

As a research assistant under Dr. Dapeng Wu, I implemented the TCNN3 architecture end-to-end (no pretraining) for the DTD dataset, following the paper Using filter banks in Convolutional Neural Networks for texture classification.

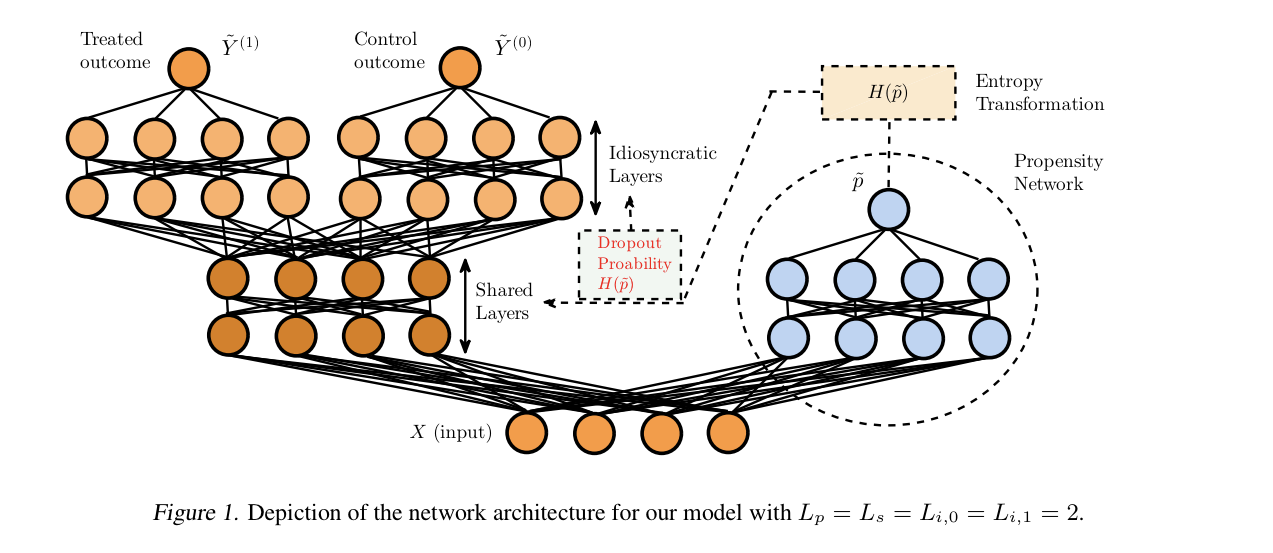

Implementation of Deep Counterfactual Networks with Propensity-Dropout

As a research assistant at DISL, I implemented the paper Deep Counterfactual Networks with Propensity-Dropout, which was subsequently used in my other research.

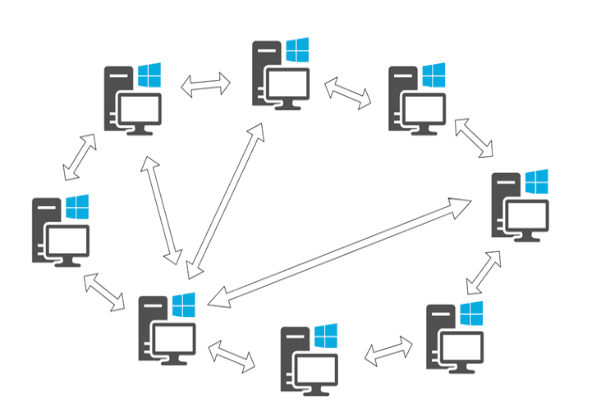

Peer to peer (p2p) network

I created this p2p network for Computer Networks (CNT5106C) while I was a Master's student at the University of Florida. It is a simplified peer-to-peer network where any number of peers can share any type of file among themselves. Implemented in Java.

Academic Service

Conference reviewer

- BMVC 2026

- ECCV 2026

- WACV 2025

- ICCV 2025

- ICML 2025

- ICLR 2024-2026

- AAAI 2024-2026

- NeurIPS 2023-2025

- AISTATS 2025

- MICCAI 2024-2026

- CVPR 2024-2026

- CLeaR 2024-2025

- ACM BCB 2022

Journal reviewer

- TMLR

- IEEE-TMI

- JBI

- MedIA

- JAMIA

- CMPB

- Biometrical Journal

- Information Fusion

Workshop reviewer

- SCSL, ICLR 2025

- GenAI4Health, NeurIPS 2024, 2025, 2026

- CRL, NeurIPS 2023

- SCIS, ICML 2023

- IMLH, ICML 2023

Teaching

Guest Lecturer

Teaching Assistant

Teaching Assistant

Talks

Invited Talk @ MedAI, Stanford University

Fall 2023, ISP AI Forum @ University of Pittsburgh [Slides]

Invited Talk @ ISP AI Forum, University of Pittsburgh

Fall 2023, ISP AI Forum @ University of Pittsburgh [Slides]

Tutorials

Tutorial on Variational Autoencoder (VAE)

Tutorial on Pearl's Do Calculus of causality

As a software engineer

Community service

Miscellaneous

Photography

Beyond research, I have a deep passion for landscape, travel, and nature photography. From sunrise in Yosemite to the quiet ridgelines of Kings Canyon, I find joy, solace, and peace in capturing the light, scale, and drama of wild places. My photography reflects the same motivation that guides my research: searching for patterns in nature, structure in chaos, and beauty in complexity. You can view my landscape photos on my 500px profile.