TL;DR

We apply a mixture of interpretable models to chest X-rays and transfer it efficiently to a new target domain with limited labeled data.

26th International Conference on Medical Image Computing and Computer Assisted Intervention, MICCAI 2023

(Early accept, top ~14%)

Also, in the 3rd Workshop on Interpretable Machine Learning in Healthcare (IMLH), ICML 2023

Problem Statement. We aim to build interpretable models that generalize to an unseen domain.

Why not transfer the Blackbox directly to a new domain? Fine-tuning all or some layers of the Blackbox model on the target domain can solve this problem, but it requires a substantial amount of labeled data and can be computationally expensive.

Why interpretable models? Radiologists follow fairly generalizable and comprehensible rules. Specifically, they search for patterns of anatomical change to identify abnormalities in an image and apply logical rules to reach specific diagnoses. This approach is transparent and close to the concept-based, interpretable-by-design approach in AI.

What is a concept-based interpretable model? Concept-based models, or more technically Concept Bottleneck Models, are a family of models that first predict human-understandable concepts from the input (images) and then predict the class labels from those concepts. In this work, we assume the ground-truth concepts are either available in the dataset (CUB200 or Awa2) or discovered from another dataset (HAM10000, SIIM-ISIC or MIMIC-CXR). We also predict the concepts from the pre-trained embedding of the Blackbox, as in Posthoc Concept Bottleneck Models.

What is a human-understandable concept? Human-understandable concepts are high-level features that characterize the class label. For example, stripes are a human-understandable concept that helps predict the class zebra. In chest X-rays, an anatomical feature such as the lower left lobe of the lung is another human-understandable concept. For more details, refer to the TCAV paper or Concept Bottleneck Models.

What is the research gap? In medical imaging, previous research uses TCAV to quantify the role of a concept in the final prediction, but concept-based interpretable models have been mostly unexplored. Also, the common design choice among those methods relies on a single interpretable classifier to explain the entire dataset, which cannot capture diverse sample-specific explanations and performs worse than its Blackbox variant.

Why not use MoIE (ICML 2023) directly? Due to class imbalance in large chest X-ray datasets, the early interpretable models in MoIE (ICML 2023) tend to cover all samples with the disease present while ignoring disease subgroups and pathological heterogeneity.

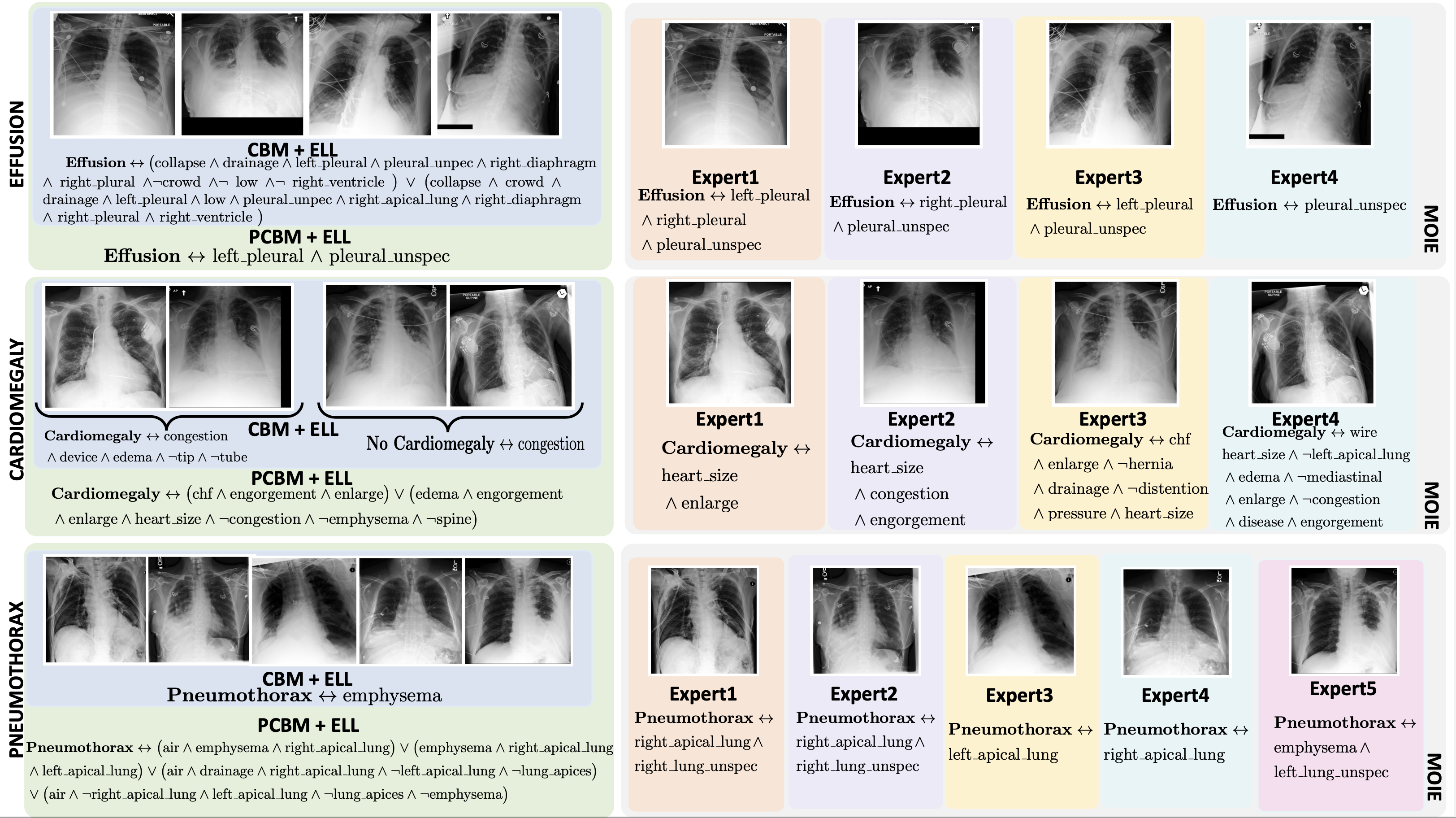

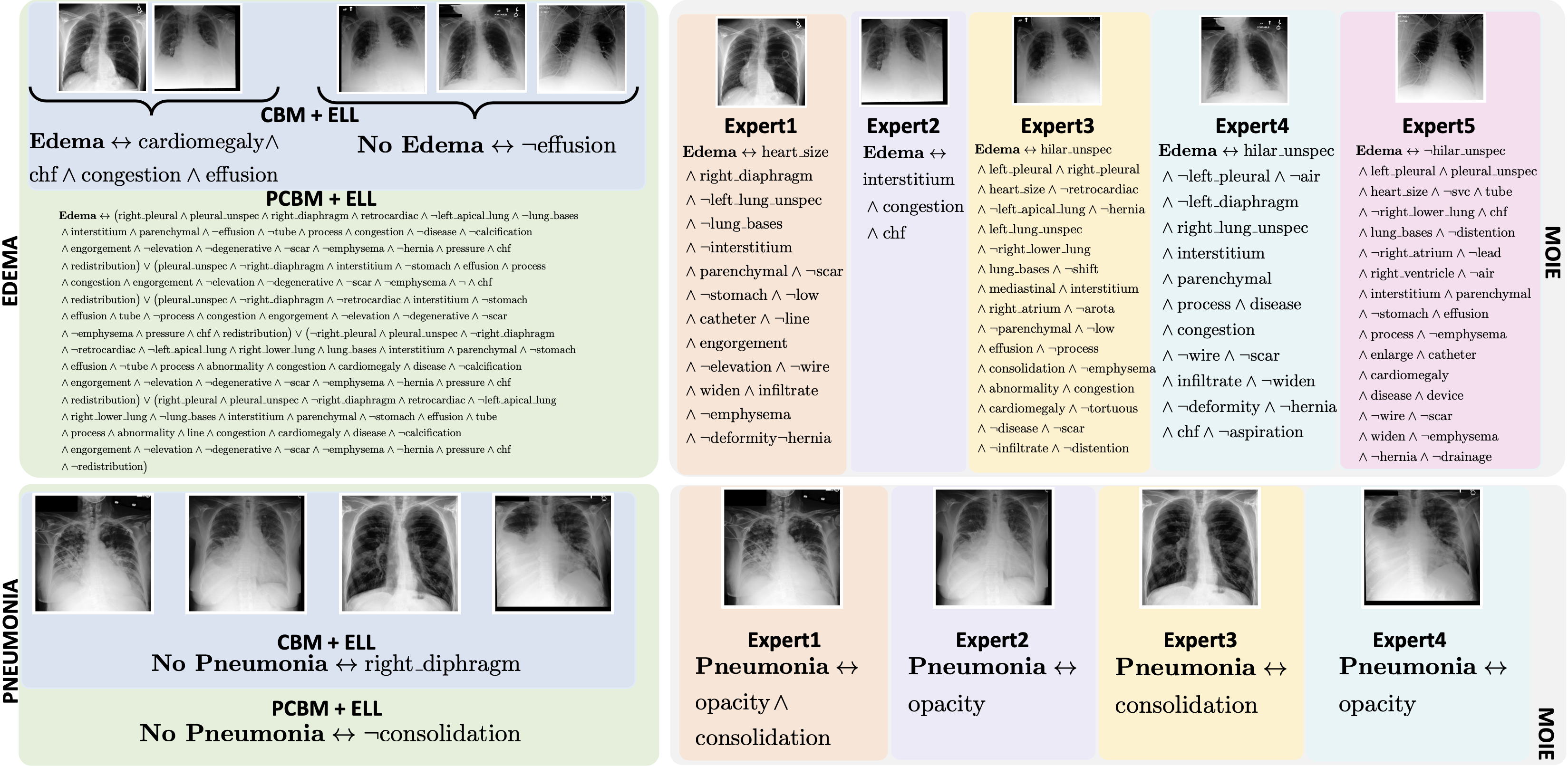

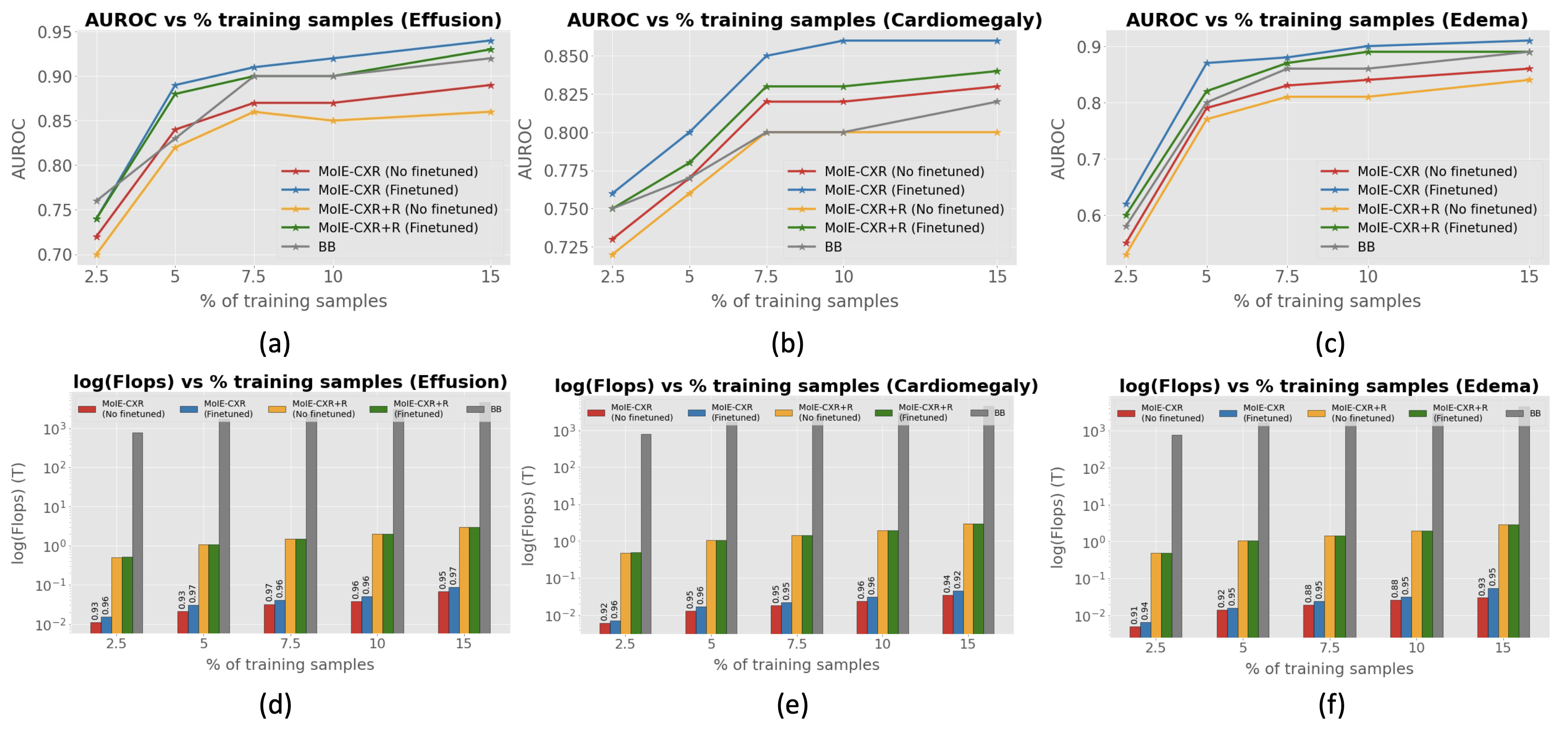

Our contribution. We propose a novel data-efficient interpretable method that can be transferred to an unseen domain. Our interpretable model is built upon human-interpretable concepts and can provide sample-specific explanations for diverse disease subtypes and pathological patterns. Following MoIE (ICML 2023), we begin with a Blackbox in the source domain. We then progressively extract a mixture of interpretable models from the Blackbox. Our method includes a set of selectors that route the explainable samples through the interpretable models. The interpretable models provide first-order logic (FOL) explanations for the samples they cover. The remaining unexplained samples are routed through the residuals until they are covered by a successive interpretable model. We repeat the process until we cover a desired fraction of the data. We address the problem of class imbalance in large chest X-ray datasets by estimating the class-stratified coverage from the total data coverage. We then fine-tune the interpretable models in the target domain. The target domain lacks concept-level annotations since they are expensive to obtain. Hence, we learn a concept detector in the target domain with a pseudo-labeling approach and fine-tune the interpretable models. Our work is the first to apply concept-based methods to CXRs and transfer them between domains.
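The routing described above can be sketched in a few lines of Python. This is a minimal illustration rather than the released implementation: the `route` helper, the toy selectors and experts, and the concept names (`pleural_effusion`, `blunt_costophrenic_angle`) are all hypothetical, and the real selectors, experts, and residuals are learned networks rather than hand-written rules.

```python
from typing import Callable, Dict, List, Optional, Tuple

Concepts = Dict[str, float]            # concept name -> predicted probability
Selector = Callable[[Concepts], bool]  # True if the paired expert should handle the sample
Predictor = Callable[[Concepts], int]  # returns a class label (0 or 1)


def route(
    sample: Concepts,
    experts: List[Tuple[Selector, Predictor]],
    residual: Predictor,
) -> Tuple[Optional[int], int]:
    """Return (index of the expert that covered the sample, prediction).

    The sample is offered to each selector in order; the first expert whose
    selector accepts it makes the prediction. If no selector accepts it, the
    final residual predicts and the expert index is None.
    """
    for k, (selector, expert) in enumerate(experts):
        if selector(sample):
            return k, expert(sample)
    return None, residual(sample)


# Toy, hand-written stand-ins for learned selectors and experts (assumptions).
experts = [
    (lambda c: c["pleural_effusion"] > 0.9, lambda c: 1),
    (lambda c: c["blunt_costophrenic_angle"] > 0.7,
     lambda c: int(c["pleural_effusion"] > 0.5)),
]
residual = lambda c: 0  # stand-in for the residual derived from the Blackbox

easy = {"pleural_effusion": 0.95, "blunt_costophrenic_angle": 0.2}
harder = {"pleural_effusion": 0.6, "blunt_costophrenic_angle": 0.8}
unexplained = {"pleural_effusion": 0.3, "blunt_costophrenic_angle": 0.1}

for name, sample in [("easy", easy), ("harder", harder), ("unexplained", unexplained)]:
    print(name, route(sample, experts, residual))
```

In this toy setup the first sample is covered by the first expert, the second falls through to the second expert, and the third is left to the residual, which mirrors the "route, interpret, repeat" order described in the paragraph.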

What is a FOL? A FOL explanation is a logical function that takes predicates (concept present/absent) as input and returns True or False. The logical expression, built from AND, OR, NOT, and parentheses, can be written in the so-called Disjunctive Normal Form (DNF): a disjunction (OR) of conjunctions (AND), also known as a sum of products.
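As a small illustration of a DNF formula over concept predicates, the sketch below represents a formula as a list of conjunctions and evaluates it for one sample. The representation, the helper names `eval_dnf` and `format_dnf`, and the concept names are assumptions made for this example; they are not taken from the paper or its code.

```python
from typing import Dict, List, Tuple

Literal = Tuple[str, bool]   # (concept name, required truth value); False means NOT
Conjunction = List[Literal]  # literals joined by AND
DNF = List[Conjunction]      # conjunctions joined by OR


def eval_dnf(formula: DNF, concepts: Dict[str, bool]) -> bool:
    """True if at least one conjunction has all of its literals satisfied."""
    return any(
        all(concepts[name] == required for name, required in conjunction)
        for conjunction in formula
    )


def format_dnf(formula: DNF) -> str:
    """Render the formula as a readable string of AND / OR / NOT."""
    clauses = []
    for conjunction in formula:
        literals = [name if required else f"NOT {name}" for name, required in conjunction]
        clauses.append("(" + " AND ".join(literals) + ")")
    return " OR ".join(clauses)


# Hypothetical explanation for "effusion"; the concept names are illustrative only.
formula: DNF = [
    [("left_pleural", True), ("pneumothorax", False)],
    [("blunt_costophrenic_angle", True)],
]

sample = {"left_pleural": True, "pneumothorax": False, "blunt_costophrenic_angle": False}
print(format_dnf(formula))
print(eval_dnf(formula, sample))
```

The first conjunction is satisfied for this sample, so the whole disjunction evaluates to True even though the second conjunction is not.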

We follow our earlier work, MoIE, to extract the interpretable models from the Blackbox. However, each expert in MoIE covers a specific percentage of the data, defined by the coverage. To address the class imbalance problem in large chest X-ray datasets, we introduce stratified coverage, under which each expert in MoIE-CXR covers a specific subset of each class in the dataset.
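The difference between a single global coverage and a class-stratified coverage can be illustrated with a toy selection rule. The sketch below only shows the bookkeeping, under the assumption that each sample already has a selector score and that the same coverage fraction is applied within every class; it does not reproduce how coverage is enforced during training, and the function names are hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence


def global_selection(scores: Sequence[float], coverage: float) -> List[int]:
    """Pick the top `coverage` fraction of all samples by selector score."""
    k = math.ceil(coverage * len(scores))
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(order[:k])


def stratified_selection(
    scores: Sequence[float], labels: Sequence[int], coverage: float
) -> List[int]:
    """Pick the top `coverage` fraction of samples separately within each class."""
    by_class: Dict[int, List[int]] = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    chosen: List[int] = []
    for indices in by_class.values():
        k = math.ceil(coverage * len(indices))
        ranked = sorted(indices, key=lambda i: scores[i], reverse=True)
        chosen.extend(ranked[:k])
    return sorted(chosen)


# Imbalanced toy data: 2 disease-positive samples, 8 negatives (made-up scores).
labels = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1, 0.05]

print(global_selection(scores, 0.5))              # covers both positives at once
print(stratified_selection(scores, labels, 0.5))  # covers half of each class
```

With the global rule, the first expert absorbs every positive sample, which is the failure mode described for imbalanced data; the stratified rule leaves some positives for later experts.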

To transfer MoIE-CXR to an unseen domain, follow the algorithm below:

We perform experiments to show that 1) MoIE-CXR captures a diverse set of concepts, 2) the performance of the residuals degrades over successive iterations as they cover harder instances, 3) MoIE-CXR does not compromise the performance of the Blackbox, and 4) MoIE-CXR achieves superior performance when transferring to an unseen domain. We extract MoIE-CXR from the Blackbox using the MIMIC-CXR dataset for the diseases effusion, cardiomegaly, edema, pneumonia, and pneumothorax. Finally, we transfer this MoIE-CXR to the Stanford-CXR dataset for the diseases effusion, cardiomegaly, and edema.

Baselines. We compare our method to two families of concept-based baselines: 1) interpretable-by-design and 2) post hoc. The end-to-end CEMs and sequential CBMs serve as interpretable-by-design baselines. Similarly, PCBM and PCBM-h serve as post hoc baselines. The standard CBM and PCBM models do not show how the concepts are composed to make the label prediction. So, we create CBM + ELL, PCBM + ELL, and PCBM-h + ELL by using the identical g of MoIE as a replacement for the standard classifiers of CBM and PCBM.

To view the FOL explanations for each sample, per expert and per dataset, go to the explanations directory in our official repo. The explanations are stored in separate CSV files for each expert and dataset.

@InProceedings{10.1007/978-3-031-43895-0_59,
  author="Ghosh, Shantanu and Yu, Ke and Batmanghelich, Kayhan",
  editor="Greenspan, Hayit and Madabhushi, Anant and Mousavi, Parvin and Salcudean, Septimiu and Duncan, James and Syeda-Mahmood, Tanveer and Taylor, Russell",
  title="Distilling BlackBox to Interpretable Models for Efficient Transfer Learning",
  booktitle="Medical Image Computing and Computer Assisted Intervention -- MICCAI 2023",
  year="2023",
  publisher="Springer Nature Switzerland",
  address="Cham",
  pages="628--638",
  abstract="Building generalizable AI models is one of the primary challenges in the healthcare domain. While radiologists rely on generalizable descriptive rules of abnormality, Neural Network (NN) models suffer even with a slight shift in input distribution (e.g., scanner type). Fine-tuning a model to transfer knowledge from one domain to another requires a significant amount of labeled data in the target domain. In this paper, we develop an interpretable model that can be efficiently fine-tuned to an unseen target domain with minimal computational cost. We assume the interpretable component of NN to be approximately domain-invariant. However, interpretable models typically underperform compared to their Blackbox (BB) variants. We start with a BB in the source domain and distill it into a mixture of shallow interpretable models using human-understandable concepts. As each interpretable model covers a subset of data, a mixture of interpretable models achieves comparable performance as BB. Further, we use the pseudo-labeling technique from semi-supervised learning (SSL) to learn the concept classifier in the target domain, followed by fine-tuning the interpretable models in the target domain. We evaluate our model using a real-life large-scale chest-X-ray (CXR) classification dataset. The code is available at: https://github.com/batmanlab/MICCAI-2023-Route-interpret-repeat-CXRs.",
  isbn="978-3-031-43895-0"
}

@inproceedings{ghosh2023bridging,
  title={Bridging the Gap: From Post Hoc Explanations to Inherently Interpretable Models for Medical Imaging},
  author={Ghosh, Shantanu and Yu, Ke and Arabshahi, Forough and Batmanghelich, Kayhan},
  booktitle={ICML 2023: Workshop on Interpretable Machine Learning in Healthcare},
  year={2023}
}